1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

|

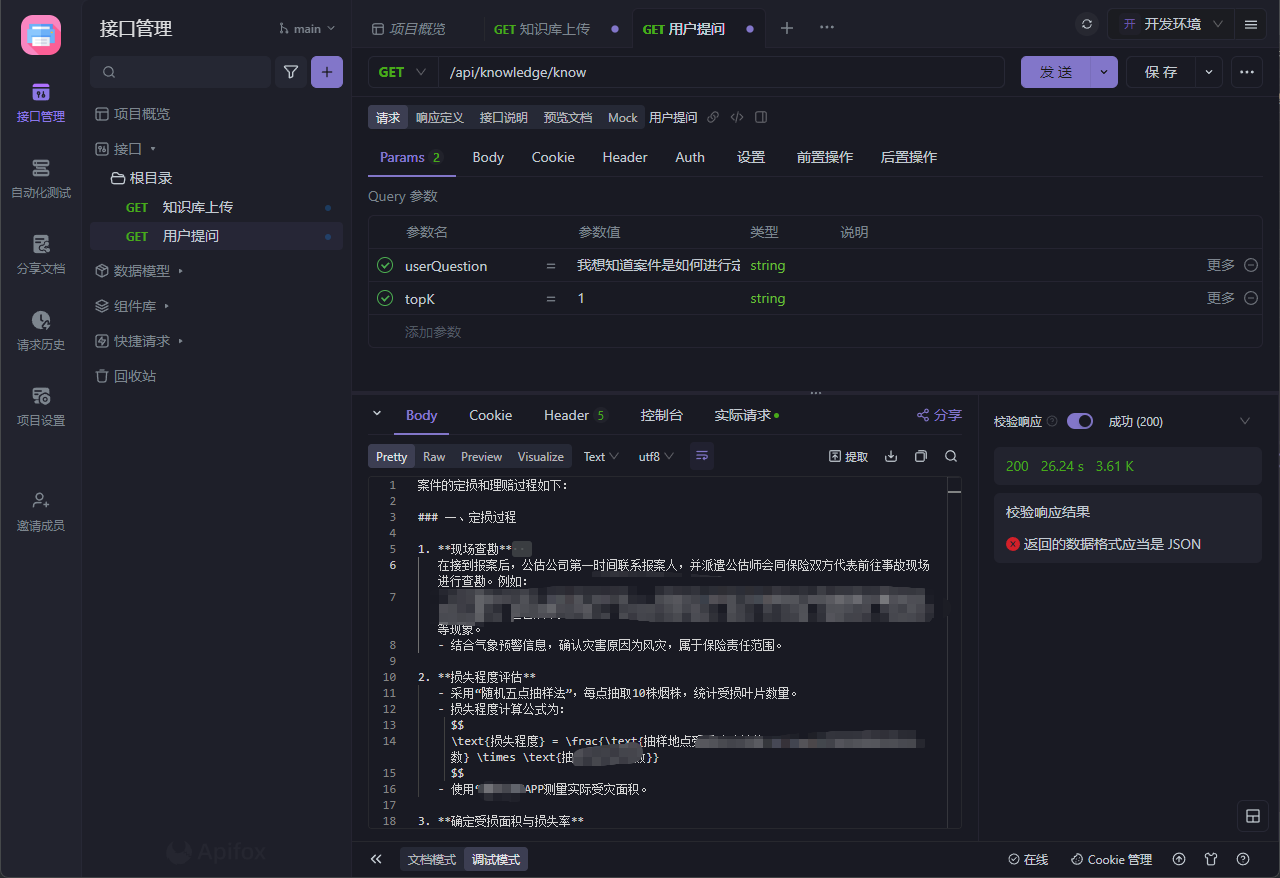

@Service

@AllArgsConstructor

@Slf4j

public class FileUploadService {

private final VectorService vectorService;

private final EmbeddingModel embeddingModel;

private final DocumentParser documentParser;

/**

* 处理用户上传的文件,生成向量并插入ES

* @param file 用户上传的文件(MultipartFile)

* @param userId 上传用户ID(用于多用户隔离)

* @return 插入成功的文档数量

*/

public int handleUploadedFile(MultipartFile file, String userId) {

try {

// 步骤1:解析文件(根据后缀名选择解析器,如PDF用PDFBox,DOCX用POI)

String fileName = file.getOriginalFilename();

List<Document> fileContents = documentParser.parse(file.getInputStream(), fileName);

// 步骤2:文本分割(避免单段文本过长,影响向量质量和检索精度)1200token/段,重叠350

TokenTextSplitter splitter = new TokenTextSplitter(1200,

350, 5,

100, true);

List<Document> splitDocs = splitter.split(fileContents);

// 步骤3:生成向量文档列表(给每个分割后的文本生成向量)

List<VectorDocument> vectorDocuments = splitDocs.stream()

.map(text -> {

VectorDocument doc = new VectorDocument();

doc.setId(UUID.randomUUID().toString());

doc.setTitle(fileName);

doc.setContent(text.getText());

// 步骤4:生成向量(核心!必须在插入前赋值)

float[] embed = embeddingModel.embed(text);

log.info("嵌入模型生成的向量维度:{}", embed.length);

doc.setVector(embed);

return doc;

})

.collect(Collectors.toList());

// 步骤5:批量插入向量文档(调用你现有的 bulkInsertDocuments 方法)

boolean isSuccess = vectorService.bulkInsertDocuments(vectorDocuments);

if (isSuccess){

log.info("用户 {} 上传文件 {} 处理完成,插入 {} 个向量文档", userId, fileName, vectorDocuments.size());

}

return vectorDocuments.size();

} catch (Exception e) {

log.error("处理用户 {} 上传文件失败", userId, e);

throw new RuntimeException("文件导入知识库失败", e);

}

}

}

|